Posted on December 16, 2017

by Doug Klugh

Imagine deploying and running your applications and services, in the blink of an eye, in an environment that is fully automated, self-healing, and scaled to match incoming demand, all on a footprint smaller than any virtual machine (VM) on the market.

Technology companies, such as Google, have been doing this for well over a decade.

This platform is called a container, and it is the biggest revolution in DevOps to date.

Running on containers helps you deliver value quickly and adapt to change even faster. In other words, containers help you be more agile. By integrating containers into your continuous integration (CI) pipeline, you can further automate your deployment process and greatly decrease time to market. And if something goes wrong, your system can detect, react to, and fix the problem before your customers even notice.

What is a Container?

A container is a stand-alone, executable package that includes everything it needs to run, such as code, runtimes, libraries, configurations, properties, etc. Running on a container isolates your software from its surroundings and the underlying infrastructure, providing consistency and security across different environments, such as Dev, Test, UAT, Staging, and Production.

Today, applications and services typically run on virtual machines (VMs) rather than directly on physical servers. It is very common to run multiple VMs on a physical server, which makes more efficient use of server resources (CPU, memory, and disk) than running on bare metal. The downside of VMs is that each requires a full installation of an operating system (OS). Not only does each OS consume resources on the underlying server, but each instance also carries capital expenses (CAPEX) and operating expenses (OPEX). Every additional OS also increases the attack surface and requires significant time and money for upgrades and patches.

Containers are a much more efficient solution than VMs. They are lightweight, isolated environments that share the kernel of the host OS. So unlike VMs, containers don’t require installation of their own OS. While this allows even more efficient use of the underlying server resources, one of the biggest advantages is the speed at which new containers can be spun up. Requests for new containers are typically fulfilled in less than one second (nearly instantaneously). Compare that to starting a new VM, which must boot its own OS and takes far longer than a split second. Containers can be created and started automatically to run additional instances of an application or service. This enables your system to scale out automatically as traffic to your application or service increases and to self-heal as problems are encountered.
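To make "scale out as traffic increases" concrete, here is a minimal sketch of the kind of rule an orchestrator uses to decide how many container instances to run. It follows the formula the Kubernetes Horizontal Pod Autoscaler documents, desired replicas = ceil(current replicas × current metric ÷ target metric), but it is a simplified illustration, not the autoscaler's code. The function name, parameters, and default replica bounds are assumptions, and it omits the tolerance and stabilization window the real controller applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Return how many replicas to run so the per-replica metric nears the target.

    Simplified form of the Horizontal Pod Autoscaler rule:
        desired = ceil(current_replicas * current_metric / target_metric)
    clamped to [min_replicas, max_replicas]. Names and bounds are illustrative.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


# Traffic spike: 3 replicas averaging 90% CPU against a 50% target.
print(desired_replicas(3, 90, 50))  # 6 -> scale out
# Traffic drops: 3 replicas averaging 20% CPU.
print(desired_replicas(3, 20, 50))  # 2 -> scale in
```

Because a new container starts in about a second, acting on a rule like this is cheap; with VMs, the same decision would be delayed by OS boot time.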

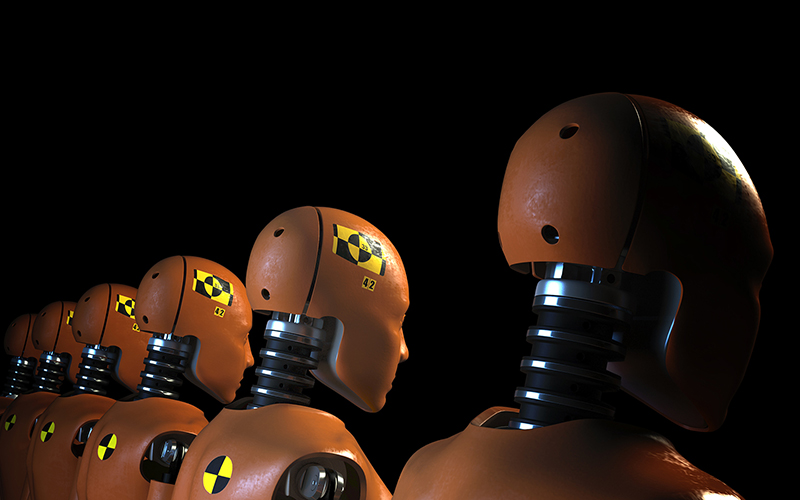

Cats vs. Cattle

Another important distinction between containers and VMs is commonly explained with a metaphor: pets versus livestock. VMs are usually treated like pets. If someone’s cat gets sick, they typically spend significant effort, time, and money nursing it back to health. Operations teams treat virtual machines the same way: when a VM gets sick, or even slows down, they devote a lot of attention to nursing it back to health. This is not the case with cattle or containers. If a cow gets sick, a rancher will simply kill it to prevent the spread of disease and minimize healthcare costs. And this is exactly how containers are managed. If a container gets “sick”, slows down, or becomes non-responsive, the container orchestration system automatically kills it and spins up a new one. Containers are specifically designed to eliminate the effort, time, and money that would otherwise be wasted nursing them back to health. The orchestration platform automates this, and the replacement happens nearly instantaneously.
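The "kill it and spin up a new one" behavior can be sketched as a reconcile loop: compare the actual state with the desired state and correct the difference. This is a toy in-memory model under stated assumptions; the `Container` and `Orchestrator` classes and the `web-N` names are hypothetical, and a real platform such as Kubernetes implements this with health probes and controllers rather than code like this.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Container:
    name: str
    healthy: bool = True  # stands in for a failed health check


@dataclass
class Orchestrator:
    """Hypothetical controller that keeps `desired` healthy replicas running."""
    desired: int
    running: List[Container] = field(default_factory=list)
    _ids: itertools.count = field(default_factory=lambda: itertools.count(1))

    def reconcile(self) -> Dict[str, List[str]]:
        # Cattle, not pets: unhealthy containers are killed, never repaired.
        killed = [c.name for c in self.running if not c.healthy]
        self.running = [c for c in self.running if c.healthy]
        # Start fresh replacements until actual state matches desired state.
        started = []
        while len(self.running) < self.desired:
            new = Container(name=f"web-{next(self._ids)}")
            self.running.append(new)
            started.append(new.name)
        # Trim extras if the desired count was lowered.
        while len(self.running) > self.desired:
            killed.append(self.running.pop().name)
        return {"killed": killed, "started": started}


orch = Orchestrator(desired=3)
orch.reconcile()                 # starts web-1, web-2, web-3
orch.running[1].healthy = False  # web-2 becomes non-responsive
print(orch.reconcile())          # {'killed': ['web-2'], 'started': ['web-4']}
```

Note that the loop never tries to fix `web-2`; it simply discards it and starts `web-4`, which is the whole point of treating containers as cattle.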

Orchestration

Container orchestration is provided by a software system that automatically deploys, scales, and manages containerized applications. There are several orchestration platforms on the market, but the most widely used is Kubernetes. Kubernetes was spawned from an internal project at Google called Borg. Over a period of 15 years, Google developed and used Borg to manage applications and services running in billions of containers distributed around the globe. Google was using this platform to manage all of its applications years before VMs became a widespread approach to virtualization. Borg remained an internal secret at Google for many years; it was later presented at a 2015 ACM conference and described in a Google paper.

Google released Kubernetes as an open source platform in June 2014. It is governed by the Cloud Native Computing Foundation (CNCF) and is available on GitHub. Kubernetes is often referred to by its numeronym, K8s.

Containerized software is typically composed of multiple interlinked containers, often spanning multiple hosts. Kubernetes lets you define all of the components and services that make up an application, including networking, message queues, APIs, etc. It then takes a pool of hosts, spins up all the required containers, deploys the right containers on the right hosts, and automatically scales as needed, with zero downtime. Scaling is where Kubernetes really shines. Most of us can only imagine the scalability challenges Google faces with Google Search, let alone with all of its other apps and services.
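Deploying "the right containers on the right hosts" is a scheduling problem. The Kubernetes scheduler works in two broad phases: it filters out nodes that cannot fit a workload, then scores the remaining nodes and picks the best one. The sketch below imitates only that shape, with a deliberately simple scoring rule (prefer the host with the most CPU left over, then the most memory); the `Host` type, the `place` function, and the scoring rule are illustrative assumptions, not the real scheduler's plugins.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Host:
    name: str
    free_cpu: float  # cores not yet reserved
    free_mem: int    # MiB not yet reserved


def place(cpu: float, mem: int, hosts: List[Host]) -> Optional[str]:
    """Pick a host for a container requesting `cpu` cores and `mem` MiB."""
    # Filter phase: drop hosts that cannot fit the request at all.
    feasible = [h for h in hosts if h.free_cpu >= cpu and h.free_mem >= mem]
    if not feasible:
        return None  # nothing fits; the container would wait as pending
    # Score phase: prefer the most CPU left over, then the most memory,
    # which spreads load across the pool.
    best = max(feasible, key=lambda h: (h.free_cpu - cpu, h.free_mem - mem))
    # Reserve the resources on the chosen host.
    best.free_cpu -= cpu
    best.free_mem -= mem
    return best.name


pool = [Host("a", 2.0, 4096), Host("b", 4.0, 2048), Host("c", 1.0, 8192)]
print(place(1.5, 3072, pool))  # a (b lacks memory, c lacks CPU)
print(place(0.5, 512, pool))   # b (most CPU left over)
print(place(8.0, 512, pool))   # None
```

Spreading load is only one possible policy; packing containers tightly onto fewer hosts is an equally valid choice when the goal is to free up whole machines.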

Stateful or Stateless

As the adoption of containers steadily rises, there seems to be a growing misconception that containers are intended only for stateless applications or services. While containers excel at running stateless software, they are also very effective at running monolithic, stateful apps that require data persistence.

For example, data in a Docker container persists by default. Data is retained when a container is stopped and is available again when the container is restarted. The only time data would be lost is when it is stored within a container and that container is destroyed, which is no different from destroying a VM. However, if the container data is stored in a data volume, which is the preferred method for persisting data, it persists even after the container is destroyed.
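The difference between data stored inside a container and data stored in a volume comes down to lifecycle. The toy model below is a hypothetical illustration, not Docker's API; the `ToyHost` and `ToyContainer` classes, the `dbdata` volume name, and the file paths are all made up. It walks through the three cases in the paragraph above: data survives a stop and restart, data in the container's own writable layer is lost when the container is removed, and data in a named volume outlives the container.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ToyContainer:
    volume: Optional[Dict[str, str]] = None  # mounted named volume, if any
    layer: Dict[str, str] = field(default_factory=dict)  # container's writable layer
    running: bool = True


class ToyHost:
    """Hypothetical model of container and volume lifecycles (not Docker's API)."""

    def __init__(self) -> None:
        self.containers: Dict[str, ToyContainer] = {}
        self.volumes: Dict[str, Dict[str, str]] = {}

    def run(self, name: str, volume: Optional[str] = None) -> ToyContainer:
        # A named volume is created once and reused by every container that mounts it.
        vol = self.volumes.setdefault(volume, {}) if volume else None
        self.containers[name] = ToyContainer(volume=vol)
        return self.containers[name]

    def stop(self, name: str) -> None:
        self.containers[name].running = False  # writable layer is kept

    def start(self, name: str) -> None:
        self.containers[name].running = True

    def rm(self, name: str) -> None:
        del self.containers[name]  # writable layer is discarded; volumes are not


host = ToyHost()
db = host.run("db", volume="dbdata")
db.layer["/tmp/cache"] = "warm"        # stored inside the container
db.volume["/data/orders"] = "42 rows"  # stored in the volume

host.stop("db")
host.start("db")
print(host.containers["db"].layer)  # {'/tmp/cache': 'warm'} (survives stop/start)

host.rm("db")
db2 = host.run("db", volume="dbdata")
print(db2.layer)   # {} (old writable layer is gone)
print(db2.volume)  # {'/data/orders': '42 rows'} (volume outlived the container)
```

This is why stateful workloads such as databases are usually run with their data directory on a volume: the container itself stays disposable, while the data does not.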

Containers go a long way toward supporting scalable, self-healing, portable applications and services, whether they are stateful, legacy applications or modern, cloud-native applications and microservices.

Conclusion

To compete in today's markets, businesses must be able to make effective and efficient use of software. This means being able to quickly adapt software to changing business and market demands. A company that can manage this better than its competition is likely to dominate the market. But this is difficult given the rapid pace of technological change and the increasing cost and difficulty of finding talented software professionals who can deliver quickly, with high quality, and with high predictability.

With the widespread adoption of DevOps principles, we are learning that automation is the key to decreasing time to market, raising quality, and delivering value when promised. Containerization goes a long way toward realizing those principles and will revolutionize DevOps practices. Containers provide rapid, consistent, scalable, and secure deployment and execution of software, directly supporting these capabilities. There is no doubt that containerization provides a distinct advantage to any organization relying on software.